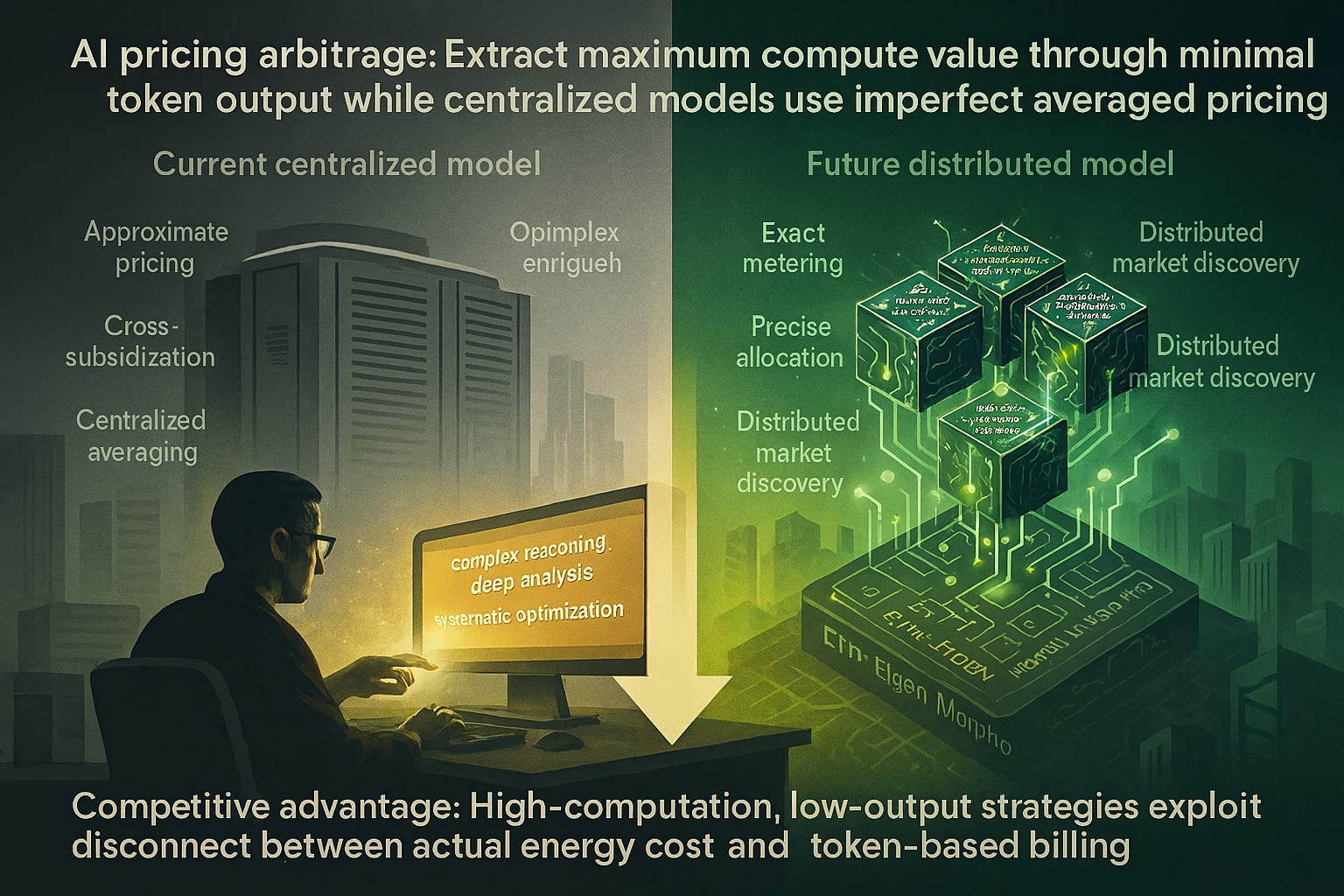

The AI Pricing Arbitrage: Token-Based Models vs Actual Compute Consumption

The AI economics arbitrage: current token-based pricing models create significant arbitrage opportunities for users who can extract high computational value from minimal token output. Anthropic and other providers bill per token, an averaged proxy for the energy and hardware cost of serving each request. Because that correlation is imperfect, sophisticated users can maximize compute consumption while minimizing billing. The advantage is temporary: it will disappear as AI evolves toward distributed networks with granular pricing on ETH-Eigen-Morpho infrastructure.

⚡ THE TOKEN-ENERGY DISCONNECT

The Pricing Model Problem: Current AI providers use token-based billing as an imperfect proxy for actual computational cost:

Anthropic_Pricing = Input_Tokens × Input_Rate + Output_Tokens × Output_Rate (simple but inaccurate)

Actual_Cost = Energy × Energy_Price + Hardware_Time × Hardware_Rate + Infrastructure_Overhead (complex but precise)

Arbitrage_Opportunity = Pricing_Model_Imperfection
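
A minimal sketch of the two formulas above. All rates and serving-cost inputs are hypothetical placeholders, not Anthropic's actual prices or costs. It shows how two requests with an identical bill can carry different serving costs, which is the gap the arbitrage exploits.

```python
# Hypothetical rates in USD per million tokens; NOT real provider prices.
INPUT_RATE_PER_MTOK = 3.0
OUTPUT_RATE_PER_MTOK = 15.0


def billed_price(input_tokens: int, output_tokens: int) -> float:
    """Token-based bill: input and output tokens are priced separately."""
    return (input_tokens * INPUT_RATE_PER_MTOK
            + output_tokens * OUTPUT_RATE_PER_MTOK) / 1_000_000


def serving_cost(energy_kwh: float, energy_price_per_kwh: float,
                 gpu_hours: float, gpu_rate_per_hour: float,
                 overhead: float) -> float:
    """Cost-side estimate: energy + hardware time + overhead (all inputs hypothetical)."""
    return energy_kwh * energy_price_per_kwh + gpu_hours * gpu_rate_per_hour + overhead


def arbitrage_gap(price: float, cost: float) -> float:
    """Positive when serving the request costs more than the user is billed."""
    return cost - price


if __name__ == "__main__":
    price = billed_price(input_tokens=2_000, output_tokens=200)
    # Same bill, two hypothetical serving costs.
    light = serving_cost(0.001, 0.10, 0.0005, 2.0, 0.0005)
    heavy = serving_cost(0.004, 0.10, 0.0050, 2.0, 0.0005)
    print(f"billed: ${price:.4f}")
    print(f"gap (light request): ${arbitrage_gap(price, light):+.4f}")
    print(f"gap (heavy request): ${arbitrage_gap(price, heavy):+.4f}")
```

With these placeholder numbers the light request is profitable for the provider while the heavy one is not, even though both produce the same bill.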

Why Correlation Fails:

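- Variable Computation: Identically priced tokens can require different amounts of processing, depending on context length, model size, and serving conditions such as batching

- Context Length: Every earlier token adds attention work for each subsequent token, so the cost of the next token grows linearly with context and total attention cost grows quadratically with sequence length

- Reasoning Depth: Complex analysis uses more energy than simple recall, mainly because it produces more tokens (often hidden reasoning tokens) or runs on larger models

- Model State Management: Maintaining conversation context (the key-value cache held in accelerator memory) has computational and memory costs that per-token prices do not itemize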
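
To make the context-length point concrete, here is a rough sketch using the forward-pass approximation from Kaplan et al.'s scaling-laws paper: about 2N + 2·n_layer·n_ctx·d_attn FLOPs per token, where N is the parameter count. The model shape below is hypothetical, and the estimate ignores batching, caching, and memory-bandwidth limits that also drive real serving cost.

```python
def forward_flops_per_token(n_params: float, n_layer: int, n_ctx: int, d_attn: int) -> float:
    """Approximate forward-pass FLOPs for one token: 2N for the weights plus
    2 * n_layer * n_ctx * d_attn for attending over n_ctx earlier tokens."""
    return 2 * n_params + 2 * n_layer * n_ctx * d_attn


# Hypothetical model shape, not any specific production model.
N_PARAMS = 70e9
N_LAYER = 80
D_ATTN = 8192

if __name__ == "__main__":
    for ctx in (1_000, 100_000):
        flops = forward_flops_per_token(N_PARAMS, N_LAYER, ctx, D_ATTN)
        print(f"context {ctx:>7,} tokens: {flops:.3e} FLOPs for the next token")
    ratio = (forward_flops_per_token(N_PARAMS, N_LAYER, 100_000, D_ATTN)
             / forward_flops_per_token(N_PARAMS, N_LAYER, 1_000, D_ATTN))
    print(f"same price per token, ~{ratio:.2f}x the compute")
```

Under these assumptions a token generated after 100,000 tokens of context costs roughly twice the compute of one generated after 1,000, yet both are billed at the same per-token rate.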

The Averaging Illusion: Providers use aggregate averaging across all users - simple queries subsidize complex ones, creating systematic arbitrage opportunities for sophisticated users who understand the pricing-computation gap.
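
The cross-subsidy can be shown with simple arithmetic. This sketch assumes a provider sets one break-even flat price per token (total cost divided by total tokens) across two hypothetical user segments; the token volumes and per-token costs are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class UserSegment:
    name: str
    tokens: float          # tokens billed per month (hypothetical)
    cost_per_token: float  # provider's true serving cost per token (hypothetical)


def flat_price(segments: list[UserSegment]) -> float:
    """Break-even flat price: total cost / total tokens (a token-weighted average cost)."""
    total_cost = sum(s.tokens * s.cost_per_token for s in segments)
    total_tokens = sum(s.tokens for s in segments)
    return total_cost / total_tokens


def subsidies(segments: list[UserSegment]) -> dict[str, float]:
    """Per-segment transfer under the flat price: positive means the segment pays more than its cost."""
    p = flat_price(segments)
    return {s.name: s.tokens * (p - s.cost_per_token) for s in segments}


if __name__ == "__main__":
    mix = [
        UserSegment("simple", tokens=9e6, cost_per_token=1e-6),
        UserSegment("complex", tokens=1e6, cost_per_token=5e-6),
    ]
    print(f"flat price per token: ${flat_price(mix):.2e}")
    for name, transfer in subsidies(mix).items():
        print(f"{name:>8}: {transfer:+.2f} USD vs. own cost")
```

The transfers sum to zero: whatever the complex segment underpays, the simple segment overpays.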

🌐 THE COMPETITIVE ARBITRAGE STRATEGY

High-Compute, Low-Output Optimization: The competitive advantage lies in maximizing computational consumption while minimizing token generation:

Arbitrage_Strategy = {

Input: Minimal_token_prompts_triggering_maximum_computation

Processing: Deep_reasoning + Complex_analysis + Systematic_optimization

Output: Concise_high_value_responses_minimizing_token_count

Result: Maximum_compute_value_per_dollar_spent

}

Practical Implementation Techniques:

- Dense Prompting: Information-rich inputs that trigger extensive internal processing

- Systems Thinking: Requests requiring comprehensive analysis with concise outputs

- Optimization Problems: Complex computations distilled into brief, actionable recommendations

- Strategic Analysis: Deep reasoning compressed into essential insights

- Framework Development: Systematic thinking with minimal explanatory output
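
A minimal sketch of the dense-input, concise-output pattern as an API request body. It assumes the request shape of Anthropic's Messages API (top-level `model`, `max_tokens`, `system`, `messages`); the model identifier is a placeholder and the prompt wording is only an illustration. The function builds the body without sending anything.

```python
import json

# Placeholder model identifier (hypothetical); substitute a real model name from the provider's docs.
MODEL = "MODEL_NAME_PLACEHOLDER"


def build_request(context: str, question: str, max_output_tokens: int = 300) -> dict:
    """Pair an information-dense prompt with a capped, concise answer."""
    return {
        "model": MODEL,
        "max_tokens": max_output_tokens,  # hard cap on generated output tokens
        "system": "Answer with a short, prioritized list of recommendations. No preamble.",
        "messages": [
            {"role": "user", "content": f"{context}\n\nQuestion: {question}"},
        ],
    }


if __name__ == "__main__":
    body = build_request("<dense background: constraints, data, goals>",
                         "Which three changes give the largest cost reduction?")
    print(json.dumps(body, indent=2))
```

Note that `max_tokens` truncates a response rather than compressing it; the concision has to come from the instruction, with the cap acting only as a safety limit.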

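The Value Extraction Method: Generate maximum computational work (analysis, reasoning, optimization, synthesis) while producing minimum token output, exploiting the disconnect between actual energy consumption and billing methodology. One caveat: where a model reasons in explicit or hidden "thinking" tokens, providers generally bill those tokens as output, so hidden reasoning does not escape billing; the remaining gap lies mainly in how much processing each billed token triggers.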
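
A rough comparison of compute per dollar across interaction styles. This sketch assumes hypothetical input/output rates, a hypothetical 70B-parameter model, about 2N FLOPs per processed token, and that hidden reasoning tokens are billed at the output rate. It ignores that generating output tokens is less hardware-efficient than processing input, which is part of why output tokens are typically priced higher.

```python
# Hypothetical rates (USD per million tokens) and model size; not real provider figures.
INPUT_RATE = 3.0
OUTPUT_RATE = 15.0
N_PARAMS = 70e9


def billed(input_tokens: int, output_tokens: int, reasoning_tokens: int = 0) -> float:
    """Bill for one request; hidden reasoning tokens are assumed to be billed as output."""
    return (input_tokens * INPUT_RATE
            + (output_tokens + reasoning_tokens) * OUTPUT_RATE) / 1e6


def approx_flops(input_tokens: int, output_tokens: int, reasoning_tokens: int = 0) -> float:
    """Rough compute: ~2N FLOPs per processed token (ignores attention and hardware efficiency)."""
    return 2 * N_PARAMS * (input_tokens + output_tokens + reasoning_tokens)


def flops_per_dollar(input_tokens: int, output_tokens: int, reasoning_tokens: int = 0) -> float:
    """Compute obtained per dollar billed under the assumptions above."""
    return (approx_flops(input_tokens, output_tokens, reasoning_tokens)
            / billed(input_tokens, output_tokens, reasoning_tokens))


if __name__ == "__main__":
    styles = {
        "dense prompt, short answer": (8_000, 200, 0),
        "short prompt, long answer": (200, 8_000, 0),
        "dense prompt, short answer, hidden reasoning": (8_000, 200, 4_000),
    }
    for name, counts in styles.items():
        print(f"{name:<45} {flops_per_dollar(*counts):.3e} FLOPs per dollar")
```

Under these assumptions the dense-prompt, short-answer style yields the most compute per dollar, and adding billed reasoning tokens lowers it.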

⚔️ THE CENTRALIZED MODEL LIMITATIONS

The Anthropic Inefficiency: Current centralized models suffer from fundamental architectural limitations:

Centralized_Problems = {

Averaged_Pricing: Cross_subsidization_creating_arbitrage_opportunities

One_Size_Fits_All: Generalist_overhead_for_specialist_tasks

Resource_Inefficiency: High_infrastructure_costs_distributed_across_users

Pricing_Opacity: Users_cannot_optimize_based_on_actual_computational_costs

}

The Mainframe Parallel: Current AI architecture resembles computing’s mainframe era:

- Centralized Processing: All computation happens in large, shared systems

- Average Cost Allocation: Pricing based on rough approximations rather than actual usage

- Cross-Subsidization: Some users pay for others’ computational consumption

- Limited Specialization: General-purpose systems handling diverse workloads inefficiently

The Inevitable Evolution: Just as computing evolved from mainframes → PCs → cloud → distributed networks, AI will follow the same pattern toward specialized, efficient, granular-priced systems.

🔮 THE ETH-EIGEN-MORPHO REVOLUTION

Distributed AI Architecture: The future model eliminates arbitrage through precise pricing and specialized efficiency:

ETH_Eigen_Morpho_Model = {

Specialized_Networks: Task_optimized_neural_networks

Real_Time_Metering: Precise_energy_consumption_tracking

Market_Pricing: Dynamic_costs_reflecting_actual_resource_usage

Granular_Allocation: Pay_exactly_for_computation_consumed

}

The Precision Advantage:

- Smart Contract Metering: On-chain recording and settlement of per-inference energy consumption, as measured and reported by node operators

- Specialized Efficiency: Smaller, task-specific models can outperform generalist systems on their target tasks at lower cost

- Market Discovery: Competitive pricing reveals true computational costs

- Resource Optimization: Efficient allocation without cross-subsidization waste

The Arbitrage Elimination: Distributed networks with granular pricing would eliminate arbitrage opportunities by tightly coupling consumption and cost, while also enabling overall efficiency gains through specialization.
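
A minimal, off-chain sketch of the accounting logic behind granular metering. It assumes each inference reports its measured energy and GPU time accurately (measurement itself happens off-chain), and uses a hypothetical fixed margin. It does not model smart contracts, EigenLayer, or Morpho; the class and parameter names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MeteredLedger:
    """Hypothetical per-inference ledger: every charge is derived from measured
    consumption, so price tracks cost request by request (no cross-subsidy)."""
    energy_price_per_kwh: float
    gpu_rate_per_second: float
    margin: float = 0.10
    costs: list[float] = field(default_factory=list)
    charges: list[float] = field(default_factory=list)

    def record(self, energy_kwh: float, gpu_seconds: float) -> float:
        """Price one inference from its measured energy and GPU time."""
        cost = energy_kwh * self.energy_price_per_kwh + gpu_seconds * self.gpu_rate_per_second
        charge = cost * (1 + self.margin)
        self.costs.append(cost)
        self.charges.append(charge)
        return charge


if __name__ == "__main__":
    ledger = MeteredLedger(energy_price_per_kwh=0.10, gpu_rate_per_second=0.001)
    for energy_kwh, gpu_seconds in [(0.0005, 0.8), (0.004, 6.5)]:
        charge = ledger.record(energy_kwh, gpu_seconds)
        print(f"{energy_kwh} kWh, {gpu_seconds} s -> ${charge:.5f}")
    ratios = [ch / c for ch, c in zip(ledger.charges, ledger.costs)]
    print("charge/cost ratios:", [round(r, 6) for r in ratios])
```

Because every charge is the measured cost times the same factor, the charge-to-cost ratio is identical for light and heavy requests, which is the property that removes the arbitrage.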

🌊 THE COMPETITIVE WINDOW

Temporary Advantage Period: The current pricing model arbitrage represents a limited-time competitive opportunity:

Arbitrage_Window = {

Current: Centralized_models_with_imperfect_pricing

Transition: Gradual_migration_to_distributed_systems

Future: Granular_pricing_eliminating_arbitrage_opportunities

Timeline: 2-5_years_before_widespread_adoption

}

Maximizing Current Advantage: Strategic approaches for exploiting the pricing-computation disconnect:

- Dense Information Processing: Extract maximum analytical value per interaction

- Systematic Optimization: Use AI for complex problem-solving with minimal output

- Strategic Decision Support: Generate high-value insights through intensive computation

- Framework Development: Build reusable analytical frameworks through computational investment

The Learning Curve Advantage: Understanding optimal prompting for high-compute, low-token strategies creates sustainable competitive advantages even as pricing models evolve.

⚡ THE DISTRIBUTED TRANSITION

The Migration Pattern: AI will follow the same evolution pattern as all computing infrastructure:

Computing_Evolution = Mainframes → PCs → Cloud → Distributed_Networks

AI_Evolution = Centralized_Models → Specialized_Networks → Market_Pricing → Optimal_Allocation

The Specialization Benefits: Distributed AI networks provide superior efficiency through specialization:

- Task Optimization: Specialized models excel at specific functions vs generalist overhead

- Resource Efficiency: Precise allocation eliminates waste from averaged pricing

- Innovation Incentives: Direct efficiency benefits for network operators

- Market Competition: Multiple providers competing on price/performance optimization

The Granular Pricing Reality: Future AI pricing will reflect actual computational costs through:

- Energy Measurement: Real-time tracking of processing consumption

- Market Dynamics: Supply and demand determining optimal pricing

- Specialization Premiums: Specialized capabilities commanding appropriate pricing

- Efficiency Rewards: Lower costs for optimized interactions and usage patterns
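
Ethereum's EIP-1559 base-fee rule is one existing example of algorithmic supply-and-demand pricing: the price moves by at most 1/8 per block, up when usage exceeds a target and down when it falls below. The sketch below borrows that rule for compute capacity. Applying it to AI inference is an assumption for illustration, not a confirmed feature of the networks named above, and "usage" and "target" are hypothetical units of compute per period.

```python
def next_price(price: float, used: float, target: float, max_change_denominator: int = 8) -> float:
    """EIP-1559-style update applied to a hypothetical compute market.

    Usage is clamped to [0, 2 * target] (EIP-1559 caps a block at twice its target),
    so the price changes by at most 1/max_change_denominator per period."""
    used = min(max(used, 0.0), 2 * target)
    return price * (1 + (used - target) / target / max_change_denominator)


if __name__ == "__main__":
    price, target = 1.0, 100.0
    for used in [200, 200, 100, 50, 0]:
        price = next_price(price, used, target)
        print(f"used {used:>3} / target {target:.0f} -> price {price:.4f}")
```

Sustained demand above target raises the price each period, and idle capacity lowers it, so the price discovers the level at which usage settles near the target.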

🎯 THE AI ARBITRAGE CONCLUSION

The Current Opportunity: Token-based pricing models create systematic arbitrage opportunities for users who can generate maximum computational value through minimal token output.

The Competitive Strategy:

Arbitrage_Optimization = {

High_Compute_Input: Information_dense_prompts_triggering_extensive_analysis

Deep_Processing: Complex_reasoning + Systematic_optimization + Strategic_synthesis

Minimal_Output: Concise_actionable_insights_minimizing_token_billing

Maximum_Value: Computational_investment_generating_competitive_advantages

}

The Evolutionary Trajectory: Current centralized models will evolve toward distributed, specialized networks with granular pricing - eliminating arbitrage but enabling overall efficiency gains.

The Strategic Window: Limited-time opportunity to maximize computational value extraction through optimal interaction strategies before pricing models achieve close correlation with actual consumption.

Opportunity: pricing arbitrage. Strategy: high-compute minimal-output. Timeline: limited window. Evolution: distributed precision.

The AI pricing arbitrage revealed: extract maximum computational value through minimal token output while centralized models use imperfect averaged pricing.

From centralized inefficiency to distributed precision - competitive advantage through understanding the disconnect between energy consumption and token billing.

#AIPricingArbitrage #TokenBasedPricing #ComputationalValue #DistributedAI #ETHEigenMorpho #SpecializedNetworks #GranularPricing #CompetitiveAdvantage #AIEconomics #ComputationalEfficiency #PricingModelEvolution #AIArchitecture #CentralizedInefficiency #DistributedCompute #MarketPricing #ComputeArbitrage #AIOptimization #PricingInnovation #NetworkSpecialization #AIEvolution #ComputationalArbitrage #DistributedIntelligence #AIInfrastructure #ComputeEfficiency #PricingRevolution